On this page, I describe the various technologies I use on a regular basis and my reasons for using them. These same reasons may not apply to you, but hopefully my rationale helps you make a more informed decision.

For context, I started my career as a full-stack software engineer with supporting roles in designing and maintaining a wide variety of Linux-based systems. I’ve designed and built numerous object-oriented libraries, databases, end-user applications, and back-end systems during my career. I have around two decades of programming experience with about 10 years of that focusing on Natural Language Processing.

Programming Languages

Python

No programming language is perfect, including Python, but I find it to be easy to read, simple, and fairly elegant. It can be slow at times and suffers from a lot of accumulated technical debt at this point. In particular, the tooling and packaging ecosystem has been very confusing to newcomers for quite some time.1 2 It can also be difficult to parallelize operations on the CPU. But the huge number of powerful libraries, including many mentioned elsewhere on this page, makes it the go-to language for many use cases, including deep learning and other NLP tasks.

2 Also, by “newcomer”, I mean nearly everyone, myself included

The addition of static typing to the language in recent years has been a game-changer for me. Python has also made notable advances in addressing the overall sluggishness of the interpreter, but is fundamentally limited by some early design choices. Python is a very high-level, abstracted language and getting fine-grained control over low-level implementation details is often impossible without dropping into other languages. I’m extremely excited by the potential of Mojo to address these limitations without losing the simplicity and elegance of Python.
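As a small illustration of what type hints buy you, here is a minimal sketch using only the standard library. The `Token` class and `count_by_tag` function are hypothetical names made up for this example, and I'm assuming a separate checker such as mypy or Pyright is run over the code, since Python itself does not enforce annotations at runtime.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    """A hypothetical token with its text and part-of-speech tag."""

    text: str
    tag: str


def count_by_tag(tokens: list[Token]) -> dict[str, int]:
    """Count how many tokens carry each tag."""
    return dict(Counter(token.tag for token in tokens))


tokens = [
    Token("Python", "NOUN"),
    Token("is", "VERB"),
    Token("readable", "ADJ"),
    Token("code", "NOUN"),
]
tag_counts = count_by_tag(tokens)

# A static type checker would flag a call like count_by_tag(["Python"])
# before the code ever runs, because a list[str] is not a list[Token].
```

The annotations double as documentation: the signature alone says what goes in and what comes out.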

R

Traditional statistical analysis in Python is not very ergonomic, making it difficult to iterate quickly. Many of the most advanced techniques, including those coming out of math/stats research, are more widely available in R.

I find the R language itself somewhat clunky, but the tidyverse suite of tools by Hadley Wickham makes it significantly more pleasant to use. And producing publication-ready graphics with ggplot2 is often easier than with Python. If I need to do some sort of ad hoc statistical analysis, I’m likely to reach for R.

SQL

I believe SQL is an essential language for anyone working with data. While not a general-purpose language, it’s the best tool for working with relational databases. When first learning SQL, I found it a bit awkward due to it being a declarative language. But I’ve used it so many times over the years in so many different situations that it was well worth the investment in learning it.

There are numerous graphical alternatives to SQL that make queries more point-and-click, but sometimes the only way to get the data you need is to hand-write a SQL query. Familiarity with it also helps in addressing slow database queries, perhaps by restructuring the query itself or adding an index.
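To make the point about indexes concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` table, its columns, and the index name are all hypothetical, and the exact wording of the query plan varies between SQLite versions, so treat this as an illustration rather than a tuning recipe.

```python
import sqlite3

# In-memory database with a hypothetical table, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [(f"customer_{i % 100}", float(i)) for i in range(1000)],
)

query = "SELECT SUM(total) FROM orders WHERE customer = ?"


def query_plan(connection: sqlite3.Connection) -> str:
    """Return SQLite's description of how it would run the query."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + query, ("customer_7",)).fetchall()
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


plan_before = query_plan(conn)  # describes a scan of the whole table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer)")
plan_after = query_plan(conn)  # describes a search using the new index

total = conn.execute(query, ("customer_7",)).fetchone()[0]
conn.close()
```

Printing `plan_before` and `plan_after` shows the database switching from scanning every row to looking rows up through the index, without any change to the query itself.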

The language is extremely stable so there’s rarely much new to learn once you’re familiar with it.

TypeScript

I’m not the biggest fan of JavaScript—it’s almost too forgiving and there are often many options for how to accomplish a particular goal. But if you’re writing any type of web front-end it’s an essential language to know. I personally prefer TypeScript because adding types to JavaScript makes it much easier to understand and maintain.

Natural Language Processing (NLP)

spaCy

spaCy is a Python library that helps you build applications that process and understand large volumes of text. It provides features such as tokenization, part-of-speech tagging, named entity recognition, and dependency parsing. Pretrained models are available for many languages, in variants that offer different levels of performance. spaCy makes many common NLP tasks easy to implement with a high likelihood of successfully getting a model into production.

While the library does have limitations and I’m more frequently needing to implement language models that require PyTorch or something similar, spaCy handles the majority of common NLP tasks. It also integrates well with other libraries, including PyTorch and HuggingFace, so needing to use these alternatives is not a problem.

PyTorch

PyTorch is a Python deep learning framework that supports NLP along with nearly any other type of deep learning model. It’s incredibly flexible and performant. PyTorch also has a relatively nice API and is easier to debug compared to some competing frameworks. If I need something beyond what spaCy can do, this library is usually what I turn to.

scikit-learn

I tend to use scikit-learn as more of a support library since I don’t often use the traditional machine learning algorithms it excels in (for example, random forests or support vector machines). More often than not, I’m using it for a bag-of-words algorithm, such as cosine similarity, its preprocessing capabilities, or its excellent support for model performance metrics.
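For readers unfamiliar with the idea, here is a minimal sketch of comparing two texts as bags of words with cosine similarity. It deliberately uses only the standard library and is not scikit-learn's API; the function names are hypothetical, and the tokenization is a naive assumption that a real project would replace with a proper vectorizer.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter[str]:
    """Lowercase the text and count each word, ignoring word order."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter[str], b: Counter[str]) -> float:
    """Cosine of the angle between two word-count vectors."""
    # Counter returns 0 for words missing from b, so no key checks are needed.
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Same words in a different order: similarity is (very close to) 1.
same_words = cosine_similarity(bag_of_words("the cat sat"), bag_of_words("sat the cat"))

# No words in common: similarity is 0.
no_overlap = cosine_similarity(bag_of_words("the cat sat"), bag_of_words("dogs bark loudly"))
```

Because word order is thrown away, the first pair looks identical to this measure, which is both the strength and the limitation of the approach.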

Prodigy

I’ve tried a variety of tools to annotate training data because none of them check all the boxes I have in mind. I have yet to find a clear winner, but Prodigy is the one I’ve almost exclusively switched to these days. Its main weakness for me is it can be difficult to review, and virtually impossible to correct, annotations you’ve made in the past. Allowing multiple annotators is often not easy either. The interface itself works well and it was written by the same group that maintains spaCy so it integrates well with that library.

Structured Data

Polars

Dataframes are tabular data structures that are commonly used to hold a wide variety of row/column data. For working with dataframes in Python, I vastly prefer Polars over the more traditional Pandas (see below). It’s blazingly fast, has a very well designed API, and has been gaining a lot of traction recently. I have a variety of tools and systems I’ve developed for use when doing consulting work and I’ve migrated all of them from Pandas to Polars.

Pandas

Pandas can be extremely slow, although it is improving with the recent addition of support for PyArrow. But my biggest complaint is that I’ve been using it for years and still get tripped up by its API. There seem to be so many little inconsistencies and unpredictable behaviors, probably a result of it being initially released quite a few years ago.

On the other hand, there’s a ton of great information and help available because of its popularity and it still has superior support for time series data. It also has much broader native compatibility and support from other libraries (although once again, this is starting to change due to Arrow support). On the bright side, I can do almost all my coding in Polars and switch to Pandas as needed with a quick to_pandas() call.

PostgreSQL

I’ve used many databases over the years, but if I need to install a DBMS, it’s almost certainly going to be PostgreSQL. It’s very powerful and I basically never find myself needing something it can’t do. I really appreciate the support they’ve added for JSON data types. Despite all the power, it remains easy to install and manage while keeping your data safe. I also like MySQL, but it doesn’t offer me anything that PostgreSQL doesn’t.

SQLite

I said above that I prefer PostgreSQL when I “need to install” a database system. For smaller use cases, you frequently don’t need a full-fledged DBMS and would prefer something light and easy to manage while still having full access to SQL queries. In these situations, I’m a big fan of SQLite. You don’t need to install anything other than the library itself and the entire database is a single file stored on the filesystem. It keeps getting more powerful over time and can be surprisingly fast given its simplicity. I love that you can make a copy of the database by simply duplicating a file.
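Here is a minimal sketch of the single-file idea using Python's built-in `sqlite3` module. The file names and the `notes` table are hypothetical, and everything happens in a temporary directory so nothing is left behind.

```python
import shutil
import sqlite3
import tempfile
from pathlib import Path

# Work in a temporary directory so the example cleans up after itself.
with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "notes.db"  # hypothetical file name
    backup = Path(tmp) / "notes-copy.db"

    # The whole database lives in this one file.
    conn = sqlite3.connect(original)
    conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
    conn.execute("INSERT INTO notes (body) VALUES (?)", ("SQLite is a single file",))
    conn.commit()
    conn.close()  # close first so the copy is taken from an idle file

    # Duplicating the file duplicates the database.
    shutil.copyfile(original, backup)

    copy_conn = sqlite3.connect(backup)
    copied_rows = copy_conn.execute("SELECT id, body FROM notes").fetchall()
    copy_conn.close()
```

One caveat worth stating: copying the file while another process is writing to it can produce an inconsistent copy, which is why this sketch closes the connection before duplicating.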

MongoDB

Despite the dramatic shift to NoSQL databases—and, arguably, the subsequent move back—most data is fundamentally relational, and doing the hard work of organizing it up front makes your life so much easier later. That said, there are still some situations where NoSQL is a good fit. For example, I frequently use MongoDB as a cache for parsed documents where I can store the underlying text and all of its related metadata for fast search and retrieval.

Web Development

Svelte

Of the approximately 1 billion JavaScript front-end frameworks, I really like Svelte, which I feel addresses a lot of my complaints about earlier frameworks. The code you write is very powerful and performant while being easy to read and intuitive. It makes writing web-based applications much easier and more enjoyable than most alternatives.

Svelte on the front-end pairs well with SvelteKit on the back-end for an even better combination.

Tailwind CSS

Cascading Style Sheets (CSS) is a powerful tool for making web pages look exactly how you want. In theory, a primary advantage is you can separate the content of a page from its presentation and apply that presentation style across your website. In practice, site-wide CSS becomes extremely difficult to manage.

Tailwind CSS accepts this reality and instead of trying to separate the content and presentation, makes it easier to manage both of them together. Complete control over the appearance of your content becomes much easier to implement and maintain.

Quarto

This website is built with Quarto and hosted on Cloudflare. Quarto makes it easy to write everything in simple Markdown text files that can be converted to great-looking websites, PDFs, and more. Full-length journal articles are supported. I used Hugo for years, but Quarto has far fewer breaking changes between releases and gives me much more flexibility over the output.

Development Environment

Neovim

I started using the vi editor during my computer science undergrad. It was painfully slow at first, but now I can jump around documents and make edits so quickly it makes all other editors feel sluggish in comparison. It’s completely customizable, and I mean completely. It works fairly well for long-form writing, but for coding, it’s second to none.

There are (at least) a few caveats:

- Configuration can be a pain and requires you to actively maintain it, so you need to be willing to optimize your setup, or even enjoy doing so. On the other hand, LazyVim and a few other emerging alternatives have made this substantially easier.

- There is technically a GUI, but vi was originally designed for terminals when it was released in 1979, and I find it cumbersome to use outside of a terminal.

- There are Windows and Mac versions, but the primary target platform seems to be Linux.

There are two main variants of vi available these days; the one I use is Neovim.3 I use it for everything possible.

3 The other is vim, but for many people, it’s hard to find compelling reasons to prefer it over Neovim at this point

This all sounds like a pain, right? It is, but it’s worth it for a lot of people, including myself. You get to feel like a ninja while editing code.

But as much as I love vi, it’s not the right choice for most people. I’ve heard good things about VSCode, the most popular code editor in the world. I really like RStudio for R development, and that apparently has Python support now, so it may be worth a try.

Even if you’re interested in vi as an editor, it’s a lot to take on at once. The most powerful feature of vi is the ability to move around and edit text without ever taking your hands off the keyboard. This power comes from it being a modal editor, meaning that the same keys can do multiple things depending on the mode you’re in.

You can get a lot of the benefits of vi without the overhead by using vi keybindings in a more traditional editor. VSCode, RStudio, and even many non-programming-oriented editors support these keybindings.

Markdown

I’ve used many different personal organizers over the years: Evernote, OneNote, Notion, and on and on. In every case, I inevitably feel I need to move on as the package stops suiting my needs. Then there’s a long process of migrating all my notes to a new system. Well, I’ve finally solved the problem once and for all!

Markdown is a plain text format that can be converted into a prettier version (this website is written completely in Markdown and converted to HTML with Quarto). I’m confident that plain text files will be around 100+ years from now. The format is easy to read even if you haven’t “prettified” it.

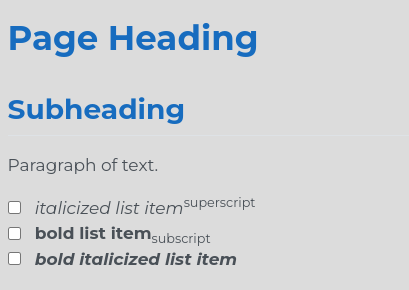

For example, the following Markdown:

# Page Heading

## Subheading

Paragraph of text.

- [ ] *italicized list item*^superscript^

- [ ] **bold list item**~subscript~

- [ ] ***bold italicized list item***

renders to:

Markdown is becoming ubiquitous. I do wish it was a bit more standardized since there are multiple variations of the language, but that’s rarely a big problem. I already mentioned using Quarto to convert Markdown to this website. I also use Obsidian if I want a graphical front-end for it. I write technical notes in Markdown which GitHub renders on their website.

Here’s to never migrating again!

Linux

I’ve been using Linux for many years and work inside of the terminal as much as possible. Using the command line is certainly awkward at first, but gets easier with practice. Configuring and administering a Linux machine has gotten much easier over the years, but admittedly still requires some time and patience.

As a command-line user, the biggest benefit comes from Linux having many small utilities that are each good at one narrow job but can be composed into powerful tool chains. For example, given the following input file:

input.txt

Command line is sometimes spelled with a dash, like "command-line", or even combined into one word, like "commandline".

The plural of "command line" is "command lines" or "commandlines".

Run the following (admittedly cryptic-looking at first) command:

$ grep -i -o -E "\bcommand[ -]*line\b" input.txt | wc -l

4

This tells us there are four occurrences of the singular form of “command line”, allowing for any number of spaces or dashes (including none) between the two words and any combination of lower and upper case, but not matching the plural form.

The command is composed of the following:

- grep: Search for the "pattern" in a file

- -o: And output each occurrence of the match on a new line

- -i: And match regardless of case (e.g., “Command”, “command”)

- -E: And treat the “pattern” as an extended regular expression

- |: Then treat the output of that command as the input to the next command

- wc -l: Which counts the number of matching lines
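For comparison, the same count can be sketched in Python with the standard `re` module. This is not a replacement for the pipeline, just the same pattern in a different tool; the text is the example input from above, embedded as a string so the snippet is self-contained.

```python
import re

# The example input from above, embedded so the snippet runs on its own.
text = (
    'Command line is sometimes spelled with a dash, like "command-line", '
    'or even combined into one word, like "commandline".\n'
    'The plural of "command line" is "command lines" or "commandlines".\n'
)

# Same pattern as the grep command. re.IGNORECASE plays the role of -i,
# and findall returns each match separately, much like -o.
pattern = re.compile(r"\bcommand[ -]*line\b", re.IGNORECASE)
matches = pattern.findall(text)

# len() stands in for `wc -l`.
match_count = len(matches)
```

The Python version is longer, which is rather the point: the shell pipeline gets the same answer in one line by composing two small tools.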

“Linux” refers to a part of the system called the kernel, which is mostly useless on its own. The kernel is packaged with numerous supporting tools into what is called a distribution. There are many available distributions, but I regularly use Fedora, Ubuntu, and Pop!_OS.4 The easiest way to try out Linux to see what you think is to put the distribution on a flash drive and boot from that. No permanent changes to your computer are needed.

4 Fedora is the most up-to-date, Ubuntu is the most stable, and Pop!_OS has a very friendly graphical interface—you don’t always have to live in the terminal!

Proxmox VE

Proxmox VE is a hypervisor, meaning that it hosts multiple virtual machines. You install Proxmox (based on Debian Linux) as the main operating system on your computer. You then create virtual computers that run inside Proxmox, each of which is a completely independent computer with its own operating system installation but which shares the hardware of the host computer.

You might have 2 copies of Windows, a macOS computer, and several Linux computers all running on one physical box. It’s then very easy to start and stop them, reinstall them, and make copies of them. If you have multiple hosts running Proxmox, you can even move the virtual machines from one physical computer to another.

There are other hypervisors, but Proxmox works well and is fairly easy to manage. Overall, virtualization makes it much easier to manage and experiment with many different machines without the corresponding hardware costs.

Docker / Podman

Docker is a containerization tool, which is kind of like a lighter-weight version of the virtualization provided by hypervisors. Instead of multiple operating systems running on one computer, you have multiple containers running on one operating system. Each container is isolated from the others, but not to the same extent as a virtual machine.

Docker is the most popular containerization tool, but I’ve been using Podman as a drop-in replacement because its rootless containers improve security and it doesn’t require a daemon to be running all the time.

Hardware

Monitor

I stopped using multiple monitors once I discovered large, curved monitors. No seam down the middle and they take up less space on the desk. Linux window managers make it easy to tile windows and efficiently manage multiple workspaces, making one monitor easy to use for maximum productivity. I specifically use an LG UltraGear QHD 34-Inch monitor (Model 34GP83A-B).

Keyboard

I have a ZSA Voyager that is optimized for coding. For long-form writing, I usually switch to a keyboard from Keychron. I specifically use a K10, but every keyboard I’ve tried from them is great, and reasonably priced.